DML project members participated in THATCamp British Library Labs, which took place on 13th February 2015 at the British Library. THATCamp stands for “The Humanities and Technology Camp”: an open, inexpensive meeting where humanists and technologists of all skill levels learn and build together in sessions that are pitched and voted on at the beginning of the day.

UPDATE: A report on the workshop is available on the BL Digital Scholarship blog.

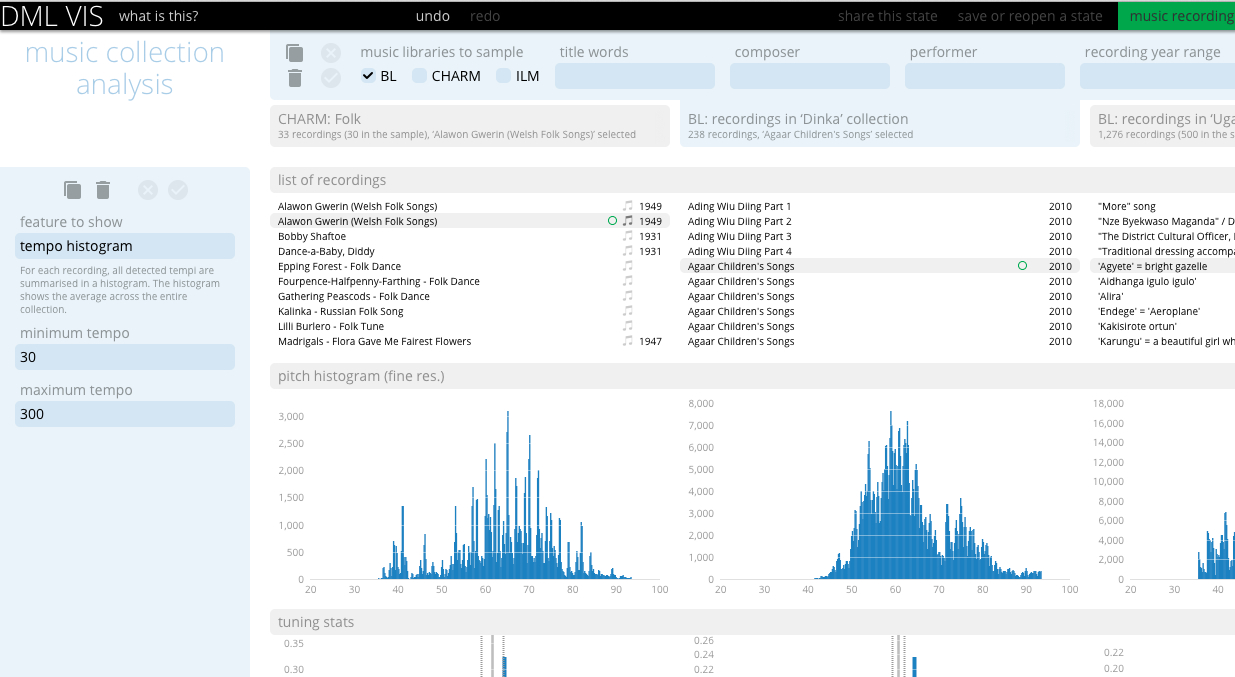

As part of the workshop, we proposed a session entitled “Big Data for Musicology”. The session was well attended by both technologists and humanists, and led to a useful discussion of user requirements and of issues involved in creating a system for automatic music analysis. Some of the discussion and feedback is summarised below:

On user requirements for a “Big Data for Music” system:

– Searching and organising music collections by spatial information.

– Coming up with a “definitive” version of a song that has many cover versions: extrapolating information from various historical performances to produce a “median” performance, and comparing different performances using mathematical models.

– Audio forensics for performance analysis?

– There may be a role for expert users rather than relying on a large crowd, so targeting that community of experts could be worthwhile. Crowdsourcing could be used to make links between data.

On the chord visualisations demo:

– It is interesting to see groupings of chords within a particular genre.

– Useful for music education, where you can see where your music sits in relation to a specific genre, and also where a piece sits in the music market.

– Could there be a playback feature, so that one could play representative tracks with specific chord patterns? It could also link to music scores.

– Browse genres/tracks by colour or shape or pattern?

On music-related games with a purpose and the Spot the Odd Song Out game:

– How did you promote the game?

– It seems difficult to compete in the games market; it might be easier to target smaller groups or expert users.

– A music-related game can be more demanding than, e.g., an image-based one, since it at least requires headphones or speakers.